Exploring the integration of audiovisual information in the primate amygdala and adjacent regions

Humans and other primates can jointly make sense of different types of sensory information, including sounds, smells, shapes and so on. By integrating sensory stimuli in the brain, they can better understand the world around them, detecting potential threats, food and other objects that are crucial to their survival.

Researchers at the Shenzhen Institute of Advanced Technology and the Chinese Academy of Sciences have recently carried out a study investigating the neural processes underpinning the integration of sounds and visual stimuli in the macaque brain. Their paper, published in Neuroscience Bulletin, looked specifically at neural processes in the amygdala, the brain region responsible for processing threats and regulating emotional responses, as well as in other regions connected to it.

“In humans and other primate species, combining information from different sensory modalities (e.g., visual, auditory and olfactory) plays an important role in social communication and is critical for survival,” Ji Dai, one of the researchers who carried out the study, told Medical Xpress. “For example, the co-occurrence of a sound enhances the human detection sensitivity for low-intensity visual targets. The neural mechanism underlying such a phenomenon, namely multi-sensory integration, has been a hot topic for research in the past few decades.”

Most past studies investigating multi-sensory integration in the primate brain focused on cortical areas (i.e., regions in the brain’s outer layer, known as the cerebral cortex), such as the superior temporal sulcus. The role of the amygdala and its adjacent regions in multi-sensory integration, on the other hand, has so far rarely been explored.

Dai and his colleagues hoped to fill this gap in the literature by examining neural processes in the amygdala and its surrounding areas while macaques integrate sounds and visual inputs from their environment. The researchers wished to determine whether specific sub-regions of the amygdala play a greater role in audiovisual integration and how their involvement unfolds in the brain.

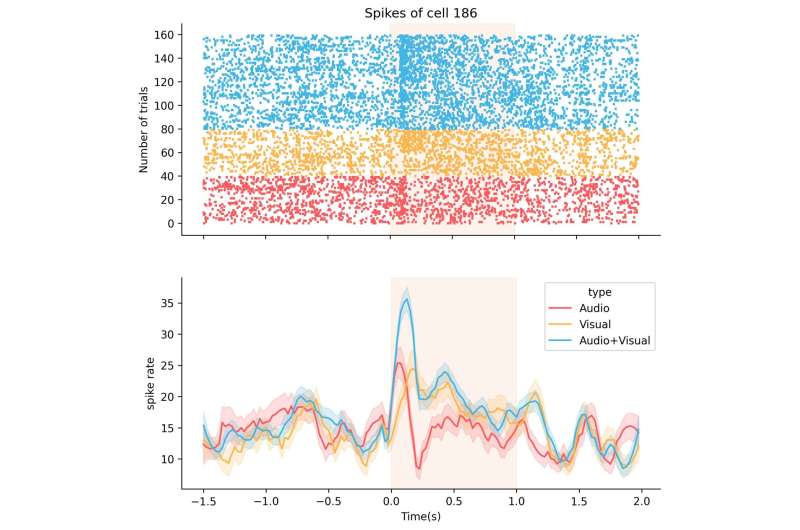

“We used a semi-chronic multi-electrode array (meaning that each electrode in the array is adjustable in recording depth even after implantation) and recorded more than 1,000 neurons from a broad area peri the amygdala in monkeys while presenting them with audio looming, audio receding, visual looming, visual receding stimuli, and the combination of two sensory inputs,” Dai explained. “After an initial evaluation of neural responses, we found that 332 neurons responded to one or more sensory stimuli (auditory, visual or audiovisual).”

After collecting their data in experiments with macaques, Dai and his colleagues analyzed it using a combination of classical statistical methods and machine learning techniques. Through these analyses, they were ultimately able to unveil the different response patterns of neurons in the amygdala and adjacent regions that occurred while the primates were presented with audiovisual stimuli.

“We classified neurons into four types based on their response patterns and localized their regional origins,” Dai said. “Using machine learning-based hierarchical clustering, we further clustered neurons into five groups and associated them with different integrating functions and sub-regions. This allowed us to identify regions distinguishing congruent and incongruent bimodal sensory inputs.”

The results gathered by this team of researchers shed new light on the involvement of the amygdala and nearby regions in the integration of auditory and visual stimuli. Overall, they suggest that peri-amygdala regions are also crucial to audiovisual integration, and they identify cell types that could play a greater role in multi-sensory integration.

“Our study also showcases the efficiency of the semi-chronic electrode array in recording a large neuron population from deep structures in primates and demonstrates the power of data-driven approaches in analyzing high-dimension electrophysiological data,” Dai added. “An intriguing direction for future research might be to develop theoretical models for multi-sensory integration based on our neurophysiology findings.”

More information:

Liang Shan et al, Neural Integration of Audiovisual Sensory Inputs in Macaque Amygdala and Adjacent Regions, Neuroscience Bulletin (2023). DOI: 10.1007/s12264-023-01043-8

© 2023 Science X Network
