3-D holographic microscopy powered by deep learning deciphers cancer immunotherapy

Live tracking and analysis of the dynamics of chimeric antigen receptor (CAR) T-cells targeting cancer cells can open new avenues for the development of cancer immunotherapy. However, imaging with conventional fluorescence microscopy can damage cells, and assessing cell-to-cell interactions is extremely difficult and labor-intensive. When researchers applied deep learning and 3-D holographic microscopy to the task, they not only avoided these difficulties but also found that the AI did the job better than humans could.

Artificial intelligence (AI) is helping researchers decipher images from a new holographic microscopy technique, allowing them to investigate a key process in cancer immunotherapy ‘live’ as it takes place. Work that would be incredibly labor-intensive and time-consuming if scientists did it by hand became effortless with the AI, which also did it better than they could have themselves. The research, conducted by scientists at KAIST, appeared in the journal eLife last December.

The human immune system can respond not just generally to any invader (such as pathogens or cancer cells) but specifically to a particular type of invader, and can remember it should it attempt to invade again. A critical stage in developing this ability is the formation of a junction between two cells: an immune cell called a T-cell, and a cell that presents it with an antigen, the part of the invader that the immune system recognizes. This is like sending a picture of a suspect to a police car so that the officers can recognize the criminal they are trying to track down. The junction between the two cells is called the immunological synapse, or IS, and forming it is the key step in teaching the immune system how to recognize a specific type of invader.

Because forming the IS is such a critical step in initiating an antigen-specific immune response, researchers have used various techniques to observe the process as it happens and study its dynamics. Most of these live-imaging techniques rely on fluorescence microscopy, in which genetic modification makes a protein of interest in the cell fluoresce, so that it can be tracked by the light it emits rather than by the ordinary transmitted or reflected light used in many conventional microscopy techniques.

However, fluorescence-based imaging can suffer from photo-bleaching and photo-toxicity, which prevent researchers from following dynamic changes in IS formation over the long term. Fluorescence imaging still requires illuminating the sample, which makes the fluorophores (the chemical compounds responsible for the fluorescence) emit light of a different color. When the sample receives too much illumination, the fluorophores can be chemically altered so that they fade (photo-bleaching), and the cells themselves can be damaged (photo-toxicity).

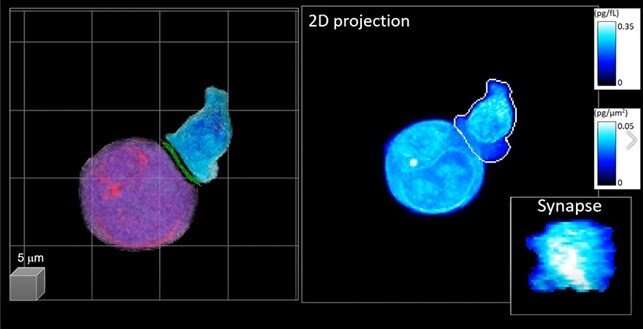

One recent option that does away with fluorescent labeling, and thereby avoids such problems, is 3-D holographic microscopy, or holotomography (HT). In this technique, holograms are used to record the sample's refractive index in 3-D. The refractive index describes how much a material slows down light, which in turn determines how sharply light changes direction when it passes from one substance into another; this is why a straw looks bent in a glass of water.
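The straw illusion is described by Snell's law. The short worked example below is not from the study; it simply uses the textbook definition of refractive index, n = c/v (the speed of light in a vacuum, c, divided by its speed in the material, v), with approximate indices of 1.33 for water and 1.00 for air, and follows a ray that leaves the water at 30° from the normal:

```latex
\begin{aligned}
n &= \frac{c}{v} \\
n_1 \sin\theta_1 &= n_2 \sin\theta_2 \\
\sin\theta_2 &= \frac{n_1}{n_2}\,\sin\theta_1 \approx \frac{1.33}{1.00}\times\sin 30^\circ = 0.665
  \;\Rightarrow\; \theta_2 \approx 41.7^\circ
\end{aligned}
```

The ray bends away from the normal as it leaves the water, so the eye traces it back to a point that is not where the submerged part of the straw really is, and the straw appears bent. Holotomography relies on the same physics: parts of a cell with different refractive indices alter the light passing through them, and the holograms record those changes.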

Until now, HT has been used to study single cells, but never the cell-cell interactions involved in immune responses. One of the main reasons is the difficulty of “segmentation”: distinguishing the different parts of an image and, in particular, deciding which parts belong to which of the interacting cells.

Manual segmentation, in which a person marks out the different parts by hand, is one option, but it is difficult and time-consuming, especially in three dimensions. To overcome this problem, automatic segmentation methods have been developed, in which simple computer algorithms perform the identification.

“But these basic algorithms often make mistakes,” explained Professor YongKeun Park from the Department of Physics, “particularly with respect to adjoining segmentation, which of course is exactly what is occurring here in the immune response we’re most interested in.”
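To see why simple algorithms struggle when cells touch, here is a minimal Python sketch of the most basic kind of automatic segmentation: mark every pixel whose refractive index exceeds a threshold as "cell," then group connected pixels into objects. The grid, refractive-index values, threshold, and function names are all hypothetical illustrations, not data or code from the KAIST study, and DeepIS works very differently.

```python
from collections import deque

# Illustrative values only (hypothetical, not from the KAIST study):
# a medium slightly above water's refractive index, and cells a little higher.
BACKGROUND_RI = 1.337
CELL_RI = 1.36
THRESHOLD = 1.345  # hypothetical cutoff separating "cell" from "medium"


def make_two_cells(gap, width=40, height=20, radius=7):
    """Return a 2-D grid of refractive-index values containing two disk-shaped 'cells'.

    gap=0 makes the disks just touch (they share a pixel); a positive gap separates them.
    """
    centers = [(10, 13), (10, 13 + 2 * radius + gap)]
    grid = [[BACKGROUND_RI] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for cy, cx in centers:
                if (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2:
                    grid[y][x] = CELL_RI
    return grid


def label_components(mask):
    """Give each 4-connected region of True pixels its own label via breadth-first flood fill."""
    height, width = len(mask), len(mask[0])
    labels = [[0] * width for _ in range(height)]
    count = 0
    for y in range(height):
        for x in range(width):
            if mask[y][x] and labels[y][x] == 0:
                count += 1  # unlabeled foreground pixel: start a new object
                labels[y][x] = count
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and labels[ny][nx] == 0:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


def threshold_segment(grid, threshold=THRESHOLD):
    """Naive automatic segmentation: threshold the map, then count connected regions."""
    mask = [[value > threshold for value in row] for row in grid]
    return label_components(mask)


if __name__ == "__main__":
    for gap in (4, 0):
        _, n_objects = threshold_segment(make_two_cells(gap))
        print(f"gap={gap}: {n_objects} object(s) found")
```

Run as a script, this reports two objects when the cells are apart but only one when they touch: the naive method merges the two cells at exactly the point of contact that matters for studying the IS. Separating them requires extra knowledge of what cell boundaries look like, which is the kind of pattern a trained network can pick up.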

So the researchers applied a deep learning framework to the HT segmentation problem. Deep learning is a type of machine learning that uses artificial neural networks, loosely inspired by the human brain, to recognize patterns. In the most common form of machine learning, the training data have already been labeled: the AI “learns” from these labeled examples and can then recognize the labeled concept when it is fed new data. For example, an AI trained on a thousand images of cats labeled ‘cat’ should be able to recognize a cat the next time it encounters an image containing one. Deep learning stacks many layers of artificial neurons, which lets the network work out its own internal features for describing the data, rather than relying on rules designed by hand, and makes it well suited to very large datasets.

In essence, the deep learning framework that the KAIST researchers developed, called DeepIS, learned its own features for distinguishing the different parts of the IS. To validate this method, the research team applied it to the dynamics of a particular IS formed between chimeric antigen receptor (CAR) T-cells and target cancer cells. They then compared the results with what they would normally have done: the laborious process of performing the segmentation manually. They found not only that DeepIS could delineate regions within the IS with high accuracy, but also that the technique captured information about the total distribution of proteins within the IS (refractive index rises with the local concentration of protein) that may not be easily measured with conventional techniques.

“In addition to allowing us to avoid the drudgery of manual segmentation and the problems of photo-bleaching and photo-toxicity, we found that the AI actually did a better job,” Park added.
