New tech assigns more accurate ‘time of death’ to cells

It’s surprisingly hard to tell when a brain cell is dead. Neurons that appear inactive and fragmented under the microscope can persist in a kind of life-or-death limbo for days, and some suddenly begin signaling again after appearing inert. For researchers who study neurodegeneration, this lack of a precise “time of death” declaration for neurons makes it hard to pin down what factors lead to cell death and to screen drugs that might save aging cells from dying.

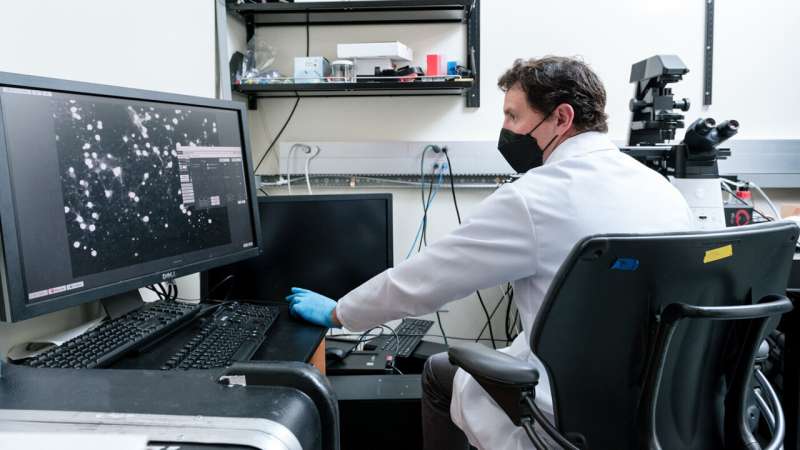

Now, researchers at Gladstone Institutes have developed a new technology that lets them track thousands of cells at a time and determine the precise moment of death for any cell in the group. The team showed, in a paper published in the journal Nature Communications, that the approach works in rodent and human cells as well as within live zebrafish, and can be used to follow the cells over a period of weeks to months.

“Getting a precise time of death is very important for unraveling cause and effect in neurodegenerative diseases,” says Steve Finkbeiner, MD, Ph.D., director of the Center for Systems and Therapeutics at Gladstone and senior author of both new studies. “It lets us figure out which factors are directly causing cell death, which are incidental, and which might be coping mechanisms that delay death.”

In a companion paper published in the journal Science Advances, the researchers combined the cell sensor technology with a machine learning approach, teaching a computer to distinguish live from dead cells 100 times faster, and more accurately, than a human can.

“It took college students months to analyze this kind of data by hand, and our new system is nearly instantaneous. It actually runs faster than we can acquire new images on the microscope,” says Jeremy Linsley, Ph.D., a scientific program leader in Finkbeiner’s lab and the first author of both new papers.

Teaching an Old Sensor New Tricks

When cells die—whatever the cause or mechanism—they eventually become fragmented and their membranes degenerate. But this degradation process takes time, making it difficult for scientists to distinguish between cells that have long since stopped functioning, those that are sick and dying, and those that are healthy.

Researchers typically use fluorescent tags or dyes to follow diseased cells with a microscope over time and try to diagnose where they are within this degradation process. Many indicator dyes, stains, and labels have been developed to distinguish the already dead cells from those that are still alive, but they often only work over short periods of time before fading and can also be toxic to the cells when they are applied.

“We really wanted an indicator that lasts for a cell’s whole lifetime—not just a few hours—and then gives a clear signal only after the specific moment the cell dies,” says Linsley.

Linsley, Finkbeiner, and their colleagues co-opted calcium sensors, originally designed to track levels of calcium inside a cell. As a cell dies and its membranes become leaky, one side effect is that calcium rushes into the cell’s watery cytosol, which normally has relatively low levels of calcium.

So, Linsley engineered the calcium sensors to reside in the cytosol, where they fluoresce only when calcium rises to a level that indicates cell death. The new sensors, known as genetically encoded death indicators (GEDI, pronounced like Jedi in Star Wars), can be inserted into any type of cell and signal whether the cell is alive or dead over its entire lifetime.
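The thresholding idea behind the sensor can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' analysis pipeline: the function name `time_of_death`, the `threshold` value, and the `min_frames` persistence check are all assumptions introduced here for the example.

```python
from typing import Optional, Sequence


def time_of_death(
    signal: Sequence[float],
    threshold: float,
    min_frames: int = 2,
) -> Optional[int]:
    """Return the index of the first frame at which the signal reaches the
    threshold and stays at or above it for `min_frames` consecutive frames.

    Returns None if that never happens (the cell is treated as alive).
    The threshold and the persistence rule are hypothetical choices.
    """
    run_start = None  # first frame of the current above-threshold run
    for i, value in enumerate(signal):
        if value >= threshold:
            if run_start is None:
                run_start = i
            if i - run_start + 1 >= min_frames:
                return run_start
        else:
            run_start = None  # a dip below threshold resets the run
    return None


# Made-up fluorescence trace for one cell, one value per imaging frame.
trace = [0.1, 0.2, 0.9, 0.1, 0.8, 0.9, 1.0]
death_frame = time_of_death(trace, threshold=0.5)
```

Requiring the signal to persist for more than one frame is one way to keep a single bright frame from being read as death; whether such a rule is needed for real GEDI data is not something the article addresses.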

To test the utility of the redesigned sensors, the group placed large groups of neurons, each containing GEDI, under the microscope. After visualizing more than a million cells, in some cases prone to neurodegeneration and in others exposed to toxic compounds, the researchers found that the GEDI sensor was far more accurate than other cell death indicators: there wasn’t a single case where the sensor was activated and a cell remained alive. GEDI also seemed to detect cell death at an earlier stage than previous methods, close to the “point of no return” for cell death.

“This allows you to separate live and dead cells in a way that’s never been possible before,” says Linsley.

Superhuman Death Detection

Linsley mentioned GEDI to his brother—Drew Linsley, Ph.D., an assistant professor at Brown University who specializes in applying artificial intelligence to large-scale biological data. His brother suggested that the researchers use the sensor, coupled with a machine learning approach, to teach a computer system to recognize live and dead brain cells based only on the form of the cell.

The team paired readouts from the new sensor with standard fluorescence images of the same neurons, and they trained a computer model, called BO-CNN, to recognize the fluorescence patterns typical of dying cells. The model, the Linsley brothers showed, was 96 percent accurate, outperformed human observers, and was more than 100 times faster than previous methods of differentiating live and dead cells.
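The idea of using the sensor as ground truth for a model can be illustrated with a toy Python sketch. It does not reproduce BO-CNN or the study's data: the function names, the 0.5 threshold, and all of the numbers are hypothetical, and the "model calls" are simply typed in by hand to show how accuracy against sensor-derived labels would be computed.

```python
from typing import List, Sequence


def labels_from_gedi(gedi_signals: Sequence[float], threshold: float) -> List[bool]:
    """Mark a cell as dead (True) when its sensor readout is at or above
    the threshold. The threshold is an assumed value for illustration."""
    return [s >= threshold for s in gedi_signals]


def accuracy(predicted: Sequence[bool], truth: Sequence[bool]) -> float:
    """Fraction of cells where the model's call matches the sensor label."""
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same length")
    if len(truth) == 0:
        raise ValueError("need at least one cell to score")
    correct = sum(p == t for p, t in zip(predicted, truth))
    return correct / len(truth)


# Made-up sensor readouts for five cells.
gedi = [0.05, 0.90, 0.10, 0.75, 0.02]
truth = labels_from_gedi(gedi, threshold=0.5)

# Hand-written stand-in for a model's live/dead calls on the same cells.
model_calls = [False, True, False, False, False]
score = accuracy(model_calls, truth)
```

In this toy case the stand-in model misses one dead cell out of five, so it agrees with the sensor on four of the five cells.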

“For some cell types, it’s extremely difficult for a person to pick up on whether a cell is alive or dead—but our computer model, by learning from GEDI, was able to differentiate them based on parts of the images we had not previously known were helpful in distinguishing live and dead cells,” says Jeremy Linsley.

Together, GEDI and BO-CNN will allow the researchers to carry out new, high-throughput studies to discover when and where brain cells die, a critical endpoint for some of the most important diseases. They can also screen drugs for their ability to delay or avoid cell death in neurodegenerative diseases. Or, in the case of cancer, they can search for drugs that hasten the death of diseased cells.
