A NEAT reduction of complex neuronal models accelerates brain research

Neurons, the fundamental units of the brain, are complex computers in their own right. They receive input signals on a tree-like structure called the dendrite. This structure does more than simply collect the input signals: it integrates and compares them to find the special combinations that matter for the neuron's role in the brain. Moreover, the dendrites of neurons come in a variety of shapes and forms, indicating that distinct neurons may have separate roles in the brain.

A simple yet faithful model

In neuroscience, there has historically been a tradeoff between a model’s faithfulness to the underlying biological neuron and its complexity. Neuroscientists have constructed detailed computational models of many different types of dendrites. These models mimic the behavior of real dendrites to a high degree of accuracy. The tradeoff, however, is that such models are very complex. Thus, it is hard to exhaustively characterize all possible responses of such models and to simulate them on a computer. Even the most powerful computers can only simulate a small fraction of the neurons in any given brain area.

Researchers from the Department of Physiology at the University of Bern have long sought to understand the role of dendrites in computations carried out by the brain. On the one hand, they have constructed detailed models of dendrites from experimental measurements, and on the other hand they have constructed neural network models with highly abstract dendrites to learn computations such as object recognition. A new study set out to find a computational method to make highly detailed models of neurons simpler, while retaining a high degree of faithfulness. This work emerged from the collaboration between experimental and computational neuroscientists from the research groups of Prof. Thomas Nevian and Prof. Walter Senn, and was led by Dr. Willem Wybo. “We wanted the method to be flexible, so that it could be applied to all types of dendrites. We also wanted it to be accurate, so that it could faithfully capture the most important functions of any given dendrite. With these simpler models, neural responses can more easily be characterized and simulation of large networks of neurons with dendrites can be conducted,” Dr. Wybo explains.

This new approach exploits an elegant mathematical relation between the responses of detailed dendrite models and of simplified dendrite models. Due to this mathematical relation, the objective that is optimized is linear in the parameters of the simplified model. “This crucial observation allowed us to use the well-known linear least squares method to find the optimized parameters. This method is very efficient compared to methods that use non-linear parameter searches, but also achieves a high degree of accuracy,” says Prof. Senn.
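To illustrate why linearity in the parameters matters, here is a minimal toy sketch in Python. It is not the NEAT method or its API: it assumes a hypothetical single passive compartment obeying C dV/dt = -g (V - E) + I, in arbitrary units, and the function names `simulate_passive` and `fit_passive` are invented for this example. Because the injected current is linear in the unknowns (C, g, g·E), they can be recovered from a voltage trace with a single linear least squares solve, with no iterative non-linear search.

```python
import numpy as np


def simulate_passive(c_m, g_l, e_l, current, dt, v0):
    """Forward-Euler simulation of a toy passive compartment (hypothetical model)."""
    v = np.empty(len(current) + 1)
    v[0] = v0
    for k, i_k in enumerate(current):
        dv_dt = (-g_l * (v[k] - e_l) + i_k) / c_m
        v[k + 1] = v[k] + dt * dv_dt
    return v


def fit_passive(v, current, dt):
    """Recover (c_m, g_l, e_l) from a voltage trace by linear least squares.

    Rearranging the model gives I = c_m * dV/dt + g_l * V - g_l * e_l,
    which is linear in the parameter vector (c_m, g_l, g_l * e_l).
    """
    dv_dt = np.diff(v) / dt
    design = np.column_stack([dv_dt, v[:-1], -np.ones(len(current))])
    theta, *_ = np.linalg.lstsq(design, current, rcond=None)
    c_m, g_l, g_times_e = theta
    return c_m, g_l, g_times_e / g_l


# Generate a "detailed model" trace with known parameters, then fit it.
rng = np.random.default_rng(0)
dt = 0.1
current = rng.normal(0.0, 1.0, size=2000)
v = simulate_passive(c_m=1.0, g_l=0.1, e_l=-70.0, current=current, dt=dt, v0=-65.0)

c_fit, g_fit, e_fit = fit_passive(v, current, dt)
print(c_fit, g_fit, e_fit)
```

In this toy setting the data are noise-free and generated by the same model, so the fit recovers the original parameters up to floating-point error. Real dendrite models involve many compartments and conductances, but the same idea applies whenever the fitting objective is linear in the reduced model's parameters.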

Tools available for AI applications

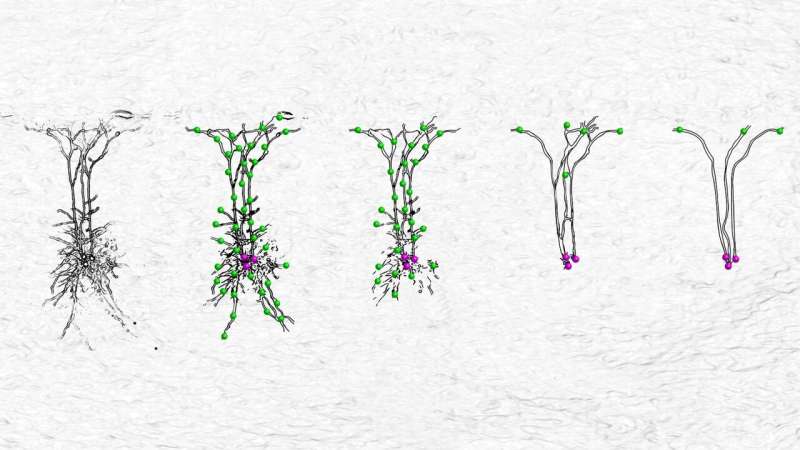

The main result of the work is the methodology itself: a flexible yet accurate way to construct reduced neuron models from experimental data and morphological reconstructions. “Our methodology shatters the perceived tradeoff between faithfulness and complexity, by showing that extremely simplified models can still capture many of the important response properties of real biological neurons,” Prof. Senn explains. “This also provides insight into ‘the essential dendrite’, the simplest possible dendrite model that still captures all possible responses of the real dendrite from which it is derived,” Dr. Wybo adds.

Thus, in specific situations, hard bounds can be established on how much a dendrite can be simplified while retaining its important response properties. “Furthermore, our methodology greatly simplifies deriving neuron models directly from experimental data,” highlights Prof. Senn, who is also a member of the steering committee of the Center for Artificial Intelligence (CAIM) of the University of Bern. The methodology has been compiled into NEAT (NEural Analysis Toolkit), an open-source software toolbox that automates the simplification process. NEAT is publicly available on GitHub.
